You just launched a major product update and now a bunch of your docs are out of date. Doing this kind of update work manually is tedious at best. At worst, it’s impossible.

LLMs can save you from the headaches (and inevitable oversights) involved.

In this post I’ll look at a series of small workflows that, together, update pages throughout your entire library.

Overview: three workflows, three QA tasks

I’m sharing three basic workflows that I use for three quality-assurance tasks:

- Assess the content for currency

- Assess the language for brand alignment

- Assess the copy for structure and clarity

I’m going to focus on using these workflows to update instructional content: “how-to” pages, developer documentation, product tutorials… Anything that provides detailed, prescriptive directions.

Here’s a video version of my walkthrough, if that’s more your speed 👇

The core design is pretty simple

On a fundamental level, the workflows in this post all follow the same process:

- Grab a bunch of URLs from a content library (a.k.a. website)

- Scrape the content from each page

- Analyze the content based on the rules we set

The rules we set, via AI prompts, will determine the type of quality assurance that each flow performs.

👆 I built most of these workflows in n8n. You don’t need n8n to build them. You could use whichever tools fit your process best. (That might even be a series of GPT prompts.)

Each workflow outputs an analytical document. We can then use those analyses to update the page at each URL.
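If you’d rather script the skeleton than wire it up in n8n, here is a minimal Python sketch of the grab, scrape, analyze loop, assuming your site publishes a standard XML sitemap. The `fetch` and `analyze` callables are placeholders of my own: `fetch` could wrap `urllib.request.urlopen`, and `analyze` is where your LLM prompt (the “rule”) would go. The output is CSV text that opens in any spreadsheet tool.

```python
import csv
import io
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from typing import Callable, Iterable

# Namespace used by standard sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def urls_from_sitemap(sitemap_xml: str) -> list[str]:
    """Grab: read every <loc> URL from an XML sitemap."""
    root = ET.fromstring(sitemap_xml)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text]


class _TextExtractor(HTMLParser):
    """Collects visible text, skipping <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())


def page_text(html: str) -> str:
    """Scrape: reduce one page of HTML to its readable text."""
    parser = _TextExtractor()
    parser.feed(html)
    return " ".join(parser.parts)


def run_linter(
    urls: Iterable[str],
    fetch: Callable[[str], str],           # hypothetical: returns a page's HTML
    analyze: Callable[[str, str], str],    # hypothetical: your LLM prompt goes here
) -> str:
    """Analyze: apply one rule to every page and return CSV text."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["url", "analysis"])
    for url in urls:
        writer.writerow([url, analyze(url, page_text(fetch(url)))])
    return out.getvalue()
```

Swapping in a different `analyze` function is all it takes to point the same loop at a different quality-assurance task.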

These workflows are simple “linters”

The design of these LLM workflows is adapted from a DevOps practice called “linting.” Software developers use automated workflows to identify errors in code. (Errors in code are like lint on your sweater: tough to spot and good to eliminate.)

Instead of code, we’ve adapted this practice to find the “lint” in page content. By automating the practice we’re able to radically scale up content maintenance.
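To make the analogy concrete, here’s a toy linter in Python. Each rule is a pattern plus a message, and every match becomes a finding with a line number, which is the same shape a code linter reports. The regex rules in the example are hypothetical; in the workflows in this post, an LLM prompt plays the role of each rule, which lets it catch problems a pattern can’t.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    rule_id: str
    pattern: str   # regular expression that signals a piece of "lint"
    message: str   # what the editor should do about it


@dataclass(frozen=True)
class Finding:
    rule_id: str
    line: int
    excerpt: str
    message: str


def lint(text: str, rules: list[Rule]) -> list[Finding]:
    """Checks every line against every rule, like a code linter does."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule in rules:
            for match in re.finditer(rule.pattern, line, flags=re.IGNORECASE):
                findings.append(Finding(rule.rule_id, lineno, match.group(0), rule.message))
    return findings


# Hypothetical example rules.
rules = [
    Rule("vague-link", r"\bclick here\b", "Use descriptive link text."),
    Rule("old-term", r"\banalytics\b", "Prefer 'observability'."),
]
```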

We can go one step further and add generative AI workflows to help out with the page revisions and updates, too. (More on that below.)

Workflow #1: analyzing the content for currency + visibility

This workflow identifies and assesses each documentation page in order to answer these questions:

- What are the instructions that your page provides for a specific task?

- Are LLM tools recommending the same instructions and processes?

- What related questions are users asking in LLMs?

It runs this analysis across the entire docs site with a single click, returning an analysis of each page.

Answering those questions enables us to…

- Revise any tutorials that are outdated

- Expand the instructional content to include related tasks

- Identify distribution and LLM visibility issues for the page overall

Let’s look at the main steps of the workflow.

Step 1: page content analysis

This step asks GPT to look at the content on each URL through the lens of a specific prompt: “What does this article explain to the user how to do?”

In other words: GPT is deciding on the subject of each docs page. We’ll use the chosen subject here to see what kind of instructional content is being offered on the same subject via LLMs.
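A rough sketch of Step 1 in Python, assuming the role/content message format that most chat-completion APIs accept. `call_llm` is a hypothetical wrapper around whichever model you use, and the 12,000-character cap is an arbitrary guard against very long pages, not a tuned value.

```python
from typing import Callable

SUBJECT_PROMPT = "What does this article explain to the user how to do?"


def build_subject_messages(page_text: str, max_chars: int = 12000) -> list[dict]:
    """Builds a chat-style prompt asking which task a docs page teaches."""
    return [
        {
            "role": "system",
            "content": "You summarize documentation pages. Answer in one short sentence "
            "that names the task, e.g. 'How to export a dashboard as a PDF.'",
        },
        {
            "role": "user",
            # Truncate very long pages so the prompt stays a predictable size.
            "content": f"{SUBJECT_PROMPT}\n\nArticle:\n{page_text[:max_chars]}",
        },
    ]


def page_subject(page_text: str, call_llm: Callable[[list[dict]], str]) -> str:
    """call_llm is a hypothetical wrapper: messages in, reply text out."""
    return call_llm(build_subject_messages(page_text)).strip()
```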

Step 2: surveying instructions from LLM tools

Our docs page is providing specific instructions for specific tasks. We want to see how other sources are instructing people to perform those same tasks.

So we use this step to prompt an LLM: “Without referring to the documentation, I want you to tell me how you would do the thing that's in our documentation.”

What can this information tell us? If the instructions given by the LLM match those on our docs page, that’s a good indicator that our docs page is…

- Visible to LLMs (so people can find it via LLMs)

- Relevant to LLMs (because they’re using our information)

- Aligned with current best practices (because LLMs are pulling information from a variety of sources)
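Step 2 might look something like the sketch below. The system message and the numbered-list instruction are my own assumptions, added so the reply can be split into individual steps for the comparison in Step 3. As before, `call_llm` is a hypothetical wrapper around your model of choice.

```python
import re
from typing import Callable

# Matches lines like "1. Open Settings" or "2) Click Security".
STEP_RE = re.compile(r"^[ \t]*\d+[.)][ \t]+(.+?)[ \t]*$", re.MULTILINE)


def build_closed_book_messages(subject: str) -> list[dict]:
    """Asks for the model's own instructions for the task a docs page covers."""
    return [
        {
            "role": "system",
            "content": "Answer from your general knowledge. Do not quote or consult any vendor documentation.",
        },
        {
            "role": "user",
            "content": "Without referring to the documentation, tell me how you would do this task. "
            f"Reply as a numbered list of steps.\n\nTask: {subject}",
        },
    ]


def parse_steps(reply: str) -> list[str]:
    """Pulls the numbered steps out of a free-text reply."""
    return STEP_RE.findall(reply)


def closed_book_steps(subject: str, call_llm: Callable[[list[dict]], str]) -> list[str]:
    """call_llm is a hypothetical wrapper: messages in, reply text out."""
    return parse_steps(call_llm(build_closed_book_messages(subject)))
```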

Step 3: comparative analysis

The workflow will now generate a spreadsheet that synthesizes all of this information in a way marketers can actually use.

The spreadsheet will:

- Identify all URLs of the docs pages we’re analyzing

- Summarize the differences between the instructions provided by the docs page versus the instructions provided independently by LLMs

This analysis will point out a variety of discrepancies in your content, such as:

- Process errors

- Omissions

- Simplifications

- Outdated terminology

This same sort of comparative analysis can be applied to more branded content as well. This level of quality assurance isn’t really possible with manual methods alone.
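In the workflow itself, an LLM writes the summary of differences. If you want a cheap first pass before spending tokens, a fuzzy string match can triage which steps appear on only one side. This is a swapped-in heuristic using Python’s `difflib`, not the method the workflow uses, and the 0.6 similarity threshold is an assumption you’d want to tune.

```python
from difflib import SequenceMatcher


def _similar(a: str, b: str) -> float:
    """Case-insensitive similarity score between 0.0 and 1.0."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def compare_steps(doc_steps: list[str], llm_steps: list[str], threshold: float = 0.6) -> dict:
    """Flags steps with no close match on the other side."""
    only_in_docs = [s for s in doc_steps if all(_similar(s, t) < threshold for t in llm_steps)]
    only_in_llm = [t for t in llm_steps if all(_similar(t, s) < threshold for s in doc_steps)]
    return {"only_in_docs": only_in_docs, "only_in_llm": only_in_llm}
```

Steps that are heavily reworded may still land on one side, so treat the output as a shortlist for the LLM (or a human) to review, not a list of errors.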

Workflow #2: updating for brand message

Now that we’ve assessed the baseline accuracy and currency of the content on these pages, let’s look at it through a branding lens:

Does the language on each of these pages align with our current brand usage and priorities?

For example, let’s say that your company recently pivoted from talking about “analytics” to talking about “observability.” This workflow will scan each page on the website to flag any text that needs to be updated.

The prompt is simple and conversational:

“We want to talk about observability instead of analytics. I'm going to provide you with the URL from our website below. Return yes if this thing talks about analytics instead of observability.”

Similar to the workflow above, it will output a spreadsheet that flags every instance of the old brand language. You could adapt this workflow to review calls-to-action as well.
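Before sending every page to an LLM, you could run a plain keyword check to decide which pages need the closer look. The sketch below assumes the old and new terms from the example above. It only catches the exact words, so paraphrases (say, “usage metrics”) still need the LLM prompt.

```python
import re


def brand_flags(text: str, old_term: str = "analytics", new_term: str = "observability", context: int = 30) -> dict:
    """Flags a page that still uses the old brand term, with a snippet for each hit."""
    old_re = re.compile(rf"\b{re.escape(old_term)}\b", re.IGNORECASE)
    new_re = re.compile(rf"\b{re.escape(new_term)}\b", re.IGNORECASE)
    # A little surrounding text makes each spreadsheet row easier to act on.
    snippets = [
        text[max(0, m.start() - context): m.end() + context].strip()
        for m in old_re.finditer(text)
    ]
    return {
        "flag": "yes" if snippets else "no",
        "old_term_count": len(snippets),
        "new_term_count": len(new_re.findall(text)),
        "snippets": snippets,
    }
```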

To recap, with workflow #1 and workflow #2 we have automated two layers of quality assurance:

- Making sure the docs instructions are accurate

- Making sure the branded language is up to date

Let’s bring in another simple flow to make sure the copy is all clean and clear.

Workflow #3: updating for clarity + style

This workflow is an extension of a sitewide spellcheck workflow I explained in another blog post.

Instead of scanning for simple typos, we can revise the prompts in the workflow to ask more sophisticated questions:

- What argument is this page putting forth?

- Is the page’s point of view supported by any evidence?

- Are the introductions and conclusions clear and concise?

- How old are the statistics cited throughout the post?
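Most of these questions need an LLM, but the last one has a cheap deterministic helper. This sketch pulls every four-digit year from a page and returns the ones older than a cutoff. The two-year cutoff is an arbitrary assumption, and because it flags any year (not only those attached to statistics), the output is a list to review rather than a verdict.

```python
import re

# Four-digit years from 1900 to 2099.
YEAR_RE = re.compile(r"\b(19\d{2}|20\d{2})\b")


def stale_years(text: str, current_year: int, max_age: int = 2) -> list[int]:
    """Returns the distinct past years mentioned that are more than max_age years old."""
    years = {int(y) for y in YEAR_RE.findall(text)}
    return sorted(y for y in years if y <= current_year and current_year - y > max_age)
```

In a real run you’d pass `datetime.date.today().year` as `current_year`; it’s a parameter here so results are repeatable.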

You can also apply this type of workflow to assess more strategic marketing concerns like internal linking.
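For internal linking, a starting point is to collect the same-site links on each page. This sketch uses only Python’s standard library; run it over every URL in the library and you have a rough link graph for spotting pages that nothing else links to. Dropping `#fragment` anchors and off-site or `mailto:` links is my own choice here.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class _LinkCollector(HTMLParser):
    """Records the href of every <a> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.hrefs: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(html: str, page_url: str) -> list[str]:
    """Returns the distinct same-site links on a page, as absolute URLs."""
    parser = _LinkCollector()
    parser.feed(html)
    site = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute = urljoin(page_url, href)  # resolves relative links like /docs/setup
        parsed = urlparse(absolute)
        if parsed.scheme in ("http", "https") and parsed.netloc == site:
            links.add(absolute.split("#")[0])
    return sorted(links)
```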

Bonus workflow: automate the publishing process

So content analysis, as we’ve seen, can be very swiftly automated with an LLM workflow. And every marketer alive today has some experience with using LLMs for content generation.

But what about the transitional step between the written document and the CMS? Have you considered automating that with a workflow?

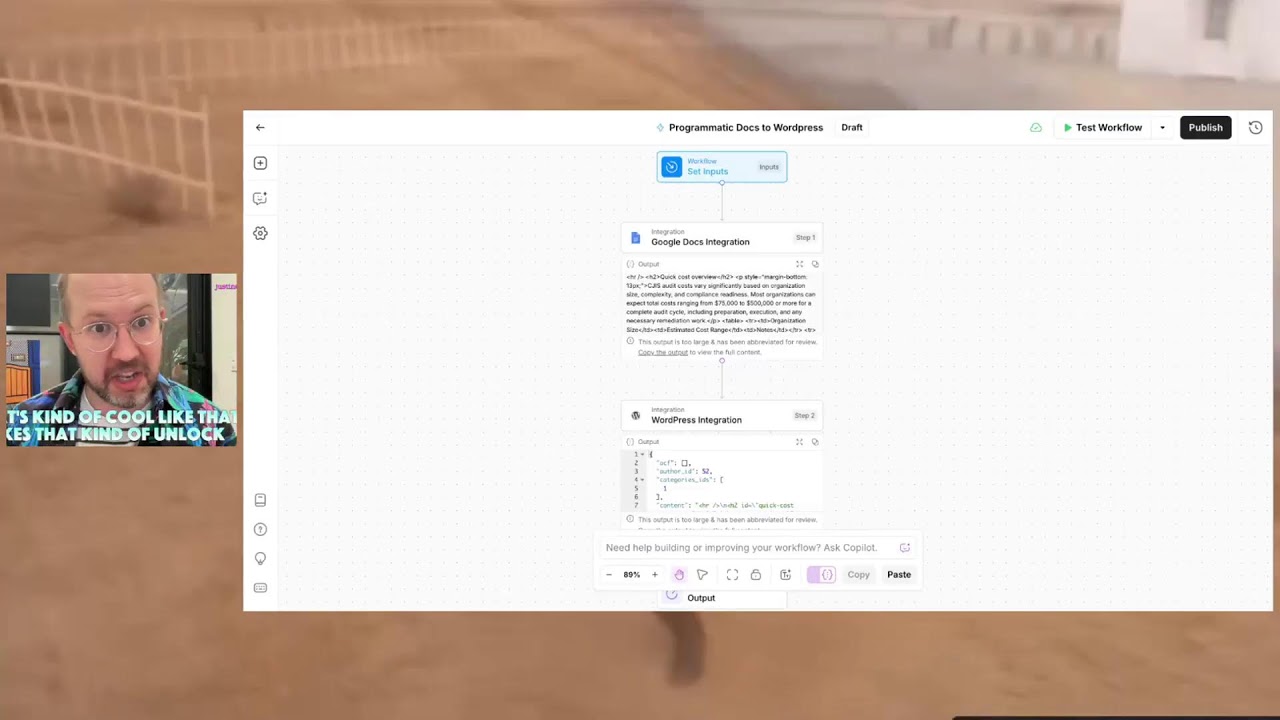

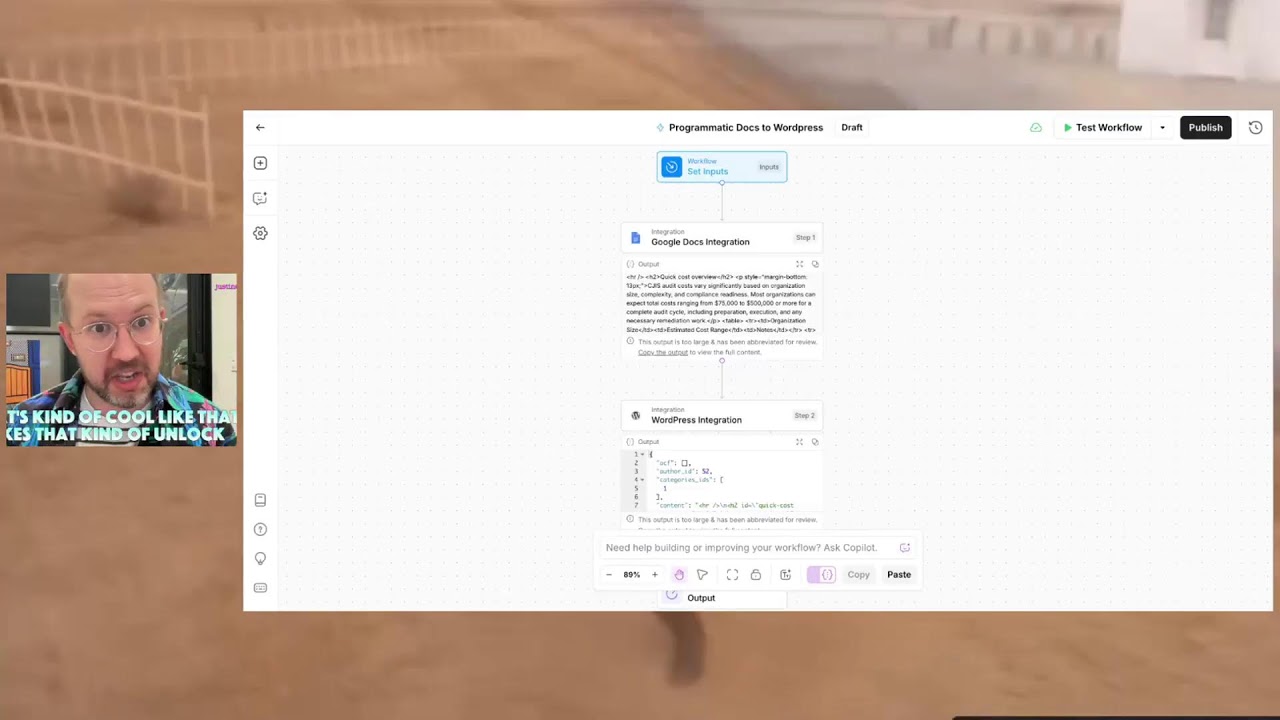

This very simple AirOps workflow does exactly that: it grabs a Google Doc and puts it into WordPress.

Simple task, right? But one that cuts out a lot of mindless, time-consuming steps in the production process.

At scale you really need stuff like this. And on a personal note: how sweet would it be to never have to look at WordPress ever again?
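The workflow above is built in AirOps. If you’d rather see the WordPress half in plain code, here is a sketch that builds a request to the WordPress REST API’s posts endpoint. It assumes Application Passwords are enabled on your site and that you’ve already exported the Google Doc to HTML (for example, through the Google Drive API’s export feature). The site URL and credentials are placeholders, and posts are created as drafts so a human still reviews them before publishing.

```python
import base64
import json
import urllib.request


def build_wp_draft_request(site: str, user: str, app_password: str, title: str, html: str) -> urllib.request.Request:
    """Builds (but does not send) a request that creates a draft post."""
    # WordPress Application Passwords use HTTP Basic auth.
    token = base64.b64encode(f"{user}:{app_password}".encode()).decode()
    body = json.dumps({"title": title, "content": html, "status": "draft"}).encode()
    return urllib.request.Request(
        url=f"{site.rstrip('/')}/wp-json/wp/v2/posts",
        data=body,
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending it is one more line: `urllib.request.urlopen(request)`.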

A content system unifies your various subsystems

For all of the very sophisticated tasks that people are delegating to AI tools, humans are still required to coordinate and make sense of it all.

Content systems need to be engineered for specific tasks. No tool can do that better than a smart marketer can.

Content engineers build the workflows, the AI, and the processes. They get the right people talking to each other, hire the right people, invest the right budget, and handle everything else that keeps the system running.

And when it’s built, we can spend more time thinking about…

- How do we design the content system to take the inputs that we have?

- What kind of investments can we make?

- Which ideas are we trying to promote?

It’s a more structured, more process-driven way of scaling production and experimentation.